There are two big categories of changes in here

- Removing lifetimes from common traits that can essentially never user a lifetime from an input (particularly `Drop` & `Debug`)

- Forwarding impls that are only possible because the lifetime doesn't matter (like `impl<R: Read + ?Sized> Read for &mut R`)

I omitted things that seemed like they could be more controversial, like the handful of iterators that have a `Item: 'static` despite the iterator having a lifetime or the `PartialEq` implementations where the flipped one cannot elide the lifetime.

Fixed the link to the ? operator

I'm working on updating all broken links, but figured I'd break up the pull requests so they are easier to review, versus just one big pull request.

Defactored Bytes::read

Removed unneeded refactoring of read_one_byte, which removed the unneeded dynamic dispatch (`dyn Read`) used by that function.

This function is only used in one place in the entire Rust codebase; there doesn't seem to be a reason for it to exist (and there especially doesn't seem to be a reason for it to use dynamic dispatch)

The std::io::read main documentation can lead to error because the

buffer is prefilled with 10 zeros that will pad the response.

Using an empty vector is better.

The `read_to_end` documentation is already correct though.

This is my first rust PR, don't hesitate to tell me if I did something

wrong.

Optimize `read_to_end`.

This patch makes `read_to_end` use Vec's memory-growth pattern rather

than using a custom pattern.

This has some interesting effects:

- If memory is reserved up front, `read_to_end` can be faster, as it

starts reading at the buffer size, rather than always starting at 32

bytes. This speeds up file reading by 2x in one of my use cases.

- It can reduce the number of syscalls when reading large files.

Previously, `read_to_end` would settle into a sequence of 8192-byte

reads. With this patch, the read size follows Vec's allocation

pattern. For example, on a 16MiB file, it can do 21 read syscalls

instead of 2057. In simple benchmarks of large files though, overall

speed is still dominated by the actual I/O.

- A downside is that Read implementations that don't implement

`initializer()` may see increased memory zeroing overhead.

I benchmarked this on a variety of data sizes, with and without

preallocated buffers. Most benchmarks see no difference, but reading

a small/medium file with a pre-allocated buffer is faster.

show in docs whether the return type of a function impls Iterator/Read/Write

Closes#25928

This PR makes it so that when rustdoc documents a function, it checks the return type to see whether it implements a handful of specific traits. If so, it will print the impl and any associated types. Rather than doing this via a whitelist within rustdoc, i chose to do this by a new `#[doc]` attribute parameter, so things like `Future` could tap into this if desired.

### Known shortcomings

~~The printing of impls currently uses the `where` class over the whole thing to shrink the font size relative to the function definition itself. Naturally, when the impl has a where clause of its own, it gets shrunken even further:~~ (This is no longer a problem because the design changed and rendered this concern moot.)

The lookup currently just looks at the top-level type, not looking inside things like Result or Option, which renders the spotlights on Read/Write a little less useful:

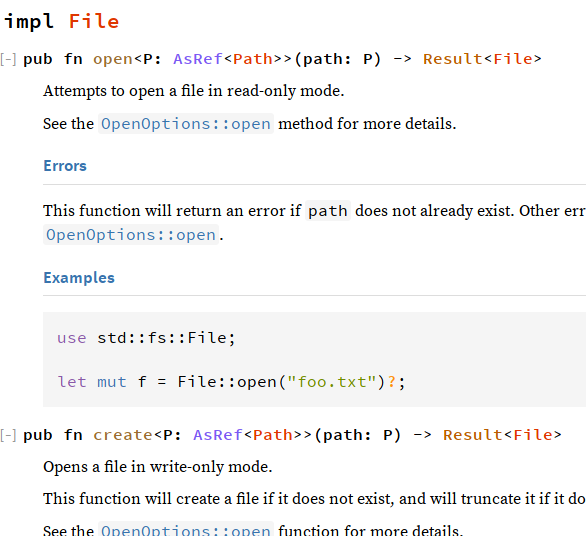

<details><summary>`File::{open, create}` don't have spotlight info (pic of old design)</summary>

</details>

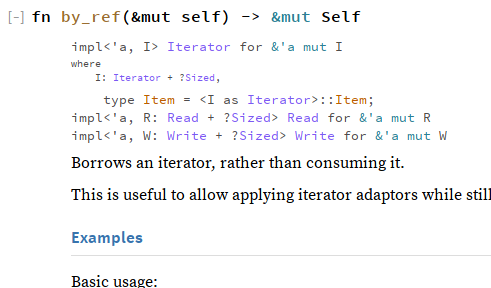

All three of the initially spotlighted traits are generically implemented on `&mut` references. Rustdoc currently treats a `&mut T` reference-to-a-generic as an impl on the reference primitive itself. `&mut Self` counts as a generic in the eyes of rustdoc. All this combines to create this lovely scene on `Iterator::by_ref`:

<details><summary>`Iterator::by_ref` spotlights Iterator, Read, and Write (pic of old design)</summary>

</details>

This patch makes `read_to_end` use Vec's memory-growth pattern rather

than using a custom pattern.

This has some interesting effects:

- If memory is reserved up front, `read_to_end` can be faster, as it

starts reading at the buffer size, rather than always starting at 32

bytes. This speeds up file reading by 2x in one of my use cases.

- It can reduce the number of syscalls when reading large files.

Previously, `read_to_end` would settle into a sequence of 8192-byte

reads. With this patch, the read size follows Vec's allocation

pattern. For example, on a 16MiB file, it can do 21 read syscalls

instead of 2057. In simple benchmarks of large files though, overall

speed is still dominated by the actual I/O.

- A downside is that Read implementations that don't implement

`initializer()` may see increased memory zeroing overhead.

I benchmarked this on a variety of data sizes, with and without

preallocated buffers. Most benchmarks see no difference, but reading

a small/medium file with a pre-allocated buffer is faster.

Remove sometimes in std::io::Read doc

We use it immediately in the next sentence, and the word is filler.

A different conversation to make is whether we want to call them Readers in the documentation at all. And whether it's actually called "Readers" elsewhere.